Prague

School.

media

Five Simple Ways to Use It for Your Work and Research

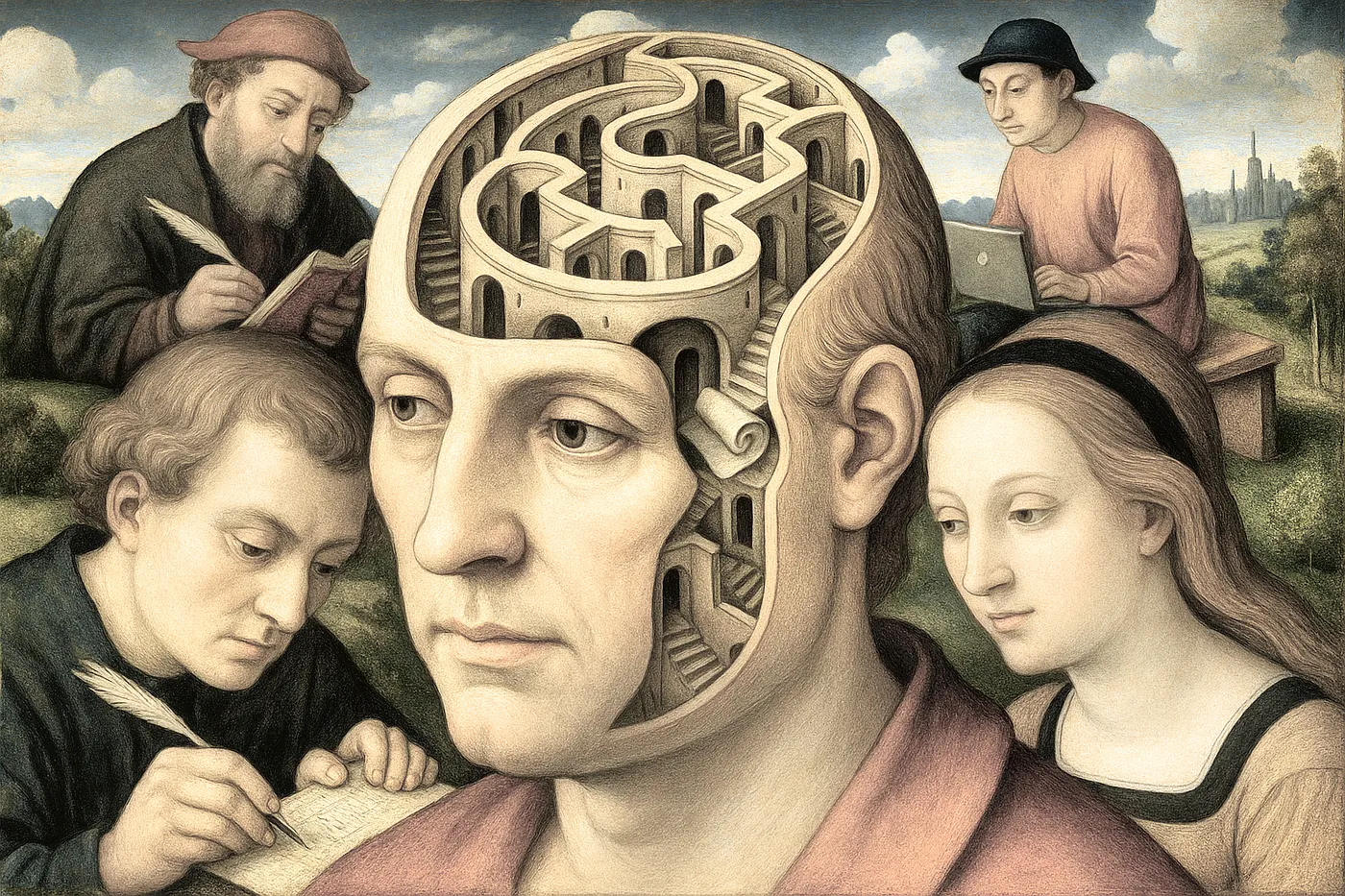

The updated GPT memory is not just a technical innovation. It’s a step toward what philosophers call extended cognition: the idea that our thinking processes can extend beyond the brain and partially “occur” in the external environment. On this view, a calculator we rely on is not just a tool but part of our mental apparatus. The same applies to notebooks, calendar reminders, search engines, and even conversations with other people that help us think.

This idea was formulated in the late 1990s by philosophers Andy Clark and David Chalmers. Their approach can be summarized briefly: if an external system participates in our thinking consistently, and if it is reliable enough that we depend on it and expect it to supply certain information at the right moment, then that system becomes functionally indistinguishable from the part of cognition that exists “in our head.” For example, if you always rely on a particular note-taking app (such as Obsidian or Evernote) to remember dates or ideas, and use it just as you use your ordinary memory, that app can be considered part of your cognitive system.

With the emergence of the new GPT memory, this philosophical thesis is beginning to transform into practical reality. The new memory system, which appeared in spring 2025, is no longer limited to remembering facts about the user such as their name, occupation, or preferences for formatting bibliographic references. It tracks how you think, which topics constantly arise in dialogues with the model, which words, metaphors, and analogies you prefer, and how your thinking style changes. Now the model builds a map of your interests, ideas, blind spots — literally a map of consciousness, but external.

GPT builds a structured map of your interests — a graph where each node represents a topic, question, persistent metaphor, or way of reasoning, and the edges between them represent connections: recurrence, logical continuity, associative proximity. This forms an external model of how you think — with dense areas of interest, blind spots, and new offshoots. As a result, GPT can, among other things:

In effect, GPT with memory begins to play the role of an external thinking partner. The model captures what you might have forgotten, helps you see patterns and cycles in your thinking, notices what usually escapes attention. This is no longer just a conversational bot, but an external meta-memory — an intellectual companion that not only “remembers for you,” but sees connections you miss — and helps you think.
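The interest map described above, with topics as nodes and recurring connections as edges, can be illustrated with a small Python sketch. This is not how GPT's memory is implemented, which the article does not document; it assumes you have already tagged each dialogue with topic labels yourself. The function names `build_interest_graph` and `strongest_links` and the sample data are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def build_interest_graph(dialogues):
    """Build a weighted co-occurrence graph from per-dialogue topic sets.

    `dialogues` is a list of sets of topic labels (hypothetical input).
    Returns {topic: {neighbor: weight}}, where weight counts the dialogues
    in which both topics appear together.
    """
    graph = defaultdict(lambda: defaultdict(int))
    for topics in dialogues:
        for a, b in combinations(sorted(topics), 2):
            graph[a][b] += 1
            graph[b][a] += 1
    return {topic: dict(neighbors) for topic, neighbors in graph.items()}


def strongest_links(graph, topic, n=3):
    """Return up to n neighbors of `topic`, strongest connection first."""
    neighbors = graph.get(topic, {})
    return sorted(neighbors, key=lambda t: (-neighbors[t], t))[:n]


# Sample data: three dialogues, each tagged by hand with its topics.
dialogues = [
    {"Foucault", "samizdat"},
    {"samizdat", "neural networks"},
    {"Foucault", "samizdat", "censorship"},
]
graph = build_interest_graph(dialogues)
print(strongest_links(graph, "samizdat"))
# ['Foucault', 'censorship', 'neural networks']
```

Counting co-occurrence captures only the "recurrence" kind of edge; logical continuity and associative proximity would need a richer signal than shared labels.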

It’s clear that this functionality is especially useful for those who work with ideas: researchers, teachers, authors, editors, analysts. Below are five simple and useful recipes for using GPT’s extended memory.

GPT’s memory allows you to track how your thinking changes over time: what you began studying, what you returned to, which topics displaced others. If you ask the model to record this weekly or monthly, it can show not only a list of your most important topics but also something like a map of your “intellectual drift.”

Examples of questions for the model:

“What (historical) analogies do I use most often?”

“What metaphors constantly come up for me?”

“What topics do I no longer raise — and what has replaced them?”

“What questions do I keep raising but hesitate to pursue?”

As a result, you’ll see your own epistemological habits: what you avoid, what you return to, what you leave unfinished.

Prompt for monthly report: Create a report: how have my topics, metaphors, analogies, and recurring questions changed over the past month? What has disappeared, what has returned?
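If you keep your own monthly list of topics alongside the model's reports, the "disappeared / returned" comparison in this prompt reduces to a few set operations. The sketch below is a minimal illustration under that assumption; `topic_drift` and the sample months are hypothetical, and nothing here reflects how GPT itself computes such a report.

```python
def topic_drift(months):
    """Compare the latest month's topics with earlier months.

    `months` is a list of sets of topic labels, oldest first, with at
    least two entries (hypothetical, hand-maintained input).
    """
    *earlier, previous, current = months
    seen_earlier = set().union(*earlier)
    return {
        # Present last month, absent this month.
        "disappeared": sorted(previous - current),
        # Absent last month, but discussed at some earlier point.
        "returned": sorted((current - previous) & seen_earlier),
        # Never seen before this month.
        "new": sorted(current - previous - seen_earlier),
    }


months = [
    {"Foucault", "samizdat"},
    {"samizdat", "neural networks"},
    {"Foucault", "neural networks", "censorship"},
]
drift = topic_drift(months)
print(drift)
# {'disappeared': ['samizdat'], 'returned': ['Foucault'], 'new': ['censorship']}
```

Keeping such a log by hand also gives you something to check the model's monthly report against.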

GPT with memory can not only answer the questions you ask but also point to the ones you have not asked: topics that logically follow from your interests but haven’t yet entered your circle of attention.

Examples of questions for the model:

Using our previous dialogues, identify 10 areas or topics not yet touched upon that I would likely benefit from exploring.

What key concepts are missing from my mental map on topic N?

As a result, you get a description of your external cognitive perimeter — i.e., areas adjacent to your interests but not yet in your field of vision. This is useful for everyone, but especially for those working in rapidly changing or interdisciplinary fields, where an unasked question can be more important than an asked one.

Prompt for monthly report: Name 10 topics that logically follow from our dialogues over the past month but weren’t touched upon in any of them.

You can task the model with keeping a shadow journal of your research activity. Everything you read, think about, upload, or discuss can be analyzed at the level of connections you haven’t yet formulated. The model will record intersections, gaps, and side branches that haven’t yet become part of your main logic.

If, for instance, you mentioned Foucault, samizdat, and neural networks at different times — GPT can show three intersections between these topics that you haven’t yet explored:

Circulation of power through distribution infrastructure — comparing the surveillance mechanism in late Foucault with samizdat as a shadow system of knowledge transfer and modern algorithmic filters in digital platforms (what reaches the reader and why).

Self-organization and subjectivation — exploring how in samizdat particular regimes of subjectivity were formed (the author as a figure of resistance) and how today’s neural network tools for editing, generation, and encryption create new forms of the “invisible subject” or anonymous co-authorship.

Censorship and access to knowledge as a technique of power — comparing manual practices of editing and limited access in samizdat with automated “censorship by default” operating in neural network environments through moderation, risk management, and language leveling.

This way, you can identify methodological failures, missing sources, parallel narratives — not from external literature, but from your own practice.

Prompt for monthly report: Using materials from our dialogues, files I uploaded, texts I read, and my notes for the month, list the semantic connections, thematic intersections, and blank spots you’ve noticed.

We tend to read authors who confirm our intuitions. With a map of your interests and preferences, GPT’s memory can suggest reading material “against the current”: texts that will irritate and unsettle you because they do not align with your habitual assumptions.

Examples of questions for the model:

Name five books that contradict the implicit assumptions contained in my questions.

Which authors would likely outrage me but still be useful for me to read?

How do specialists from other disciplines evaluate the ideas I discuss, and which of those ideas draw the most criticism?

This way, we create a situation of productive collision with what we usually filter out.

Prompt for monthly report: Compile a list of 5 books, authors, or approaches that contradict my current assumptions and habitual views.

GPT tends to agree politely and skillfully, but it does not have to. You can ask the model to take on the role of an intellectual opponent, building arguments against your usual approach or speaking from the position of a school of thought foreign to you.

Example question for the model:

Analyze my current project from the perspective of a theory I haven’t used before — even if it’s hostile to me.

This creates a situation of internal dispute: the model simulates an outside perspective, which is not always easy to produce on your own.

Prompt for monthly report: Write a short critical text (300–500 words) that challenges the key assumptions evident in my requests over the past month. Use the tone of an intelligent opponent.